Power bills in the UK are starting to skyrocket, and the costs of self-hosting are going up as a result. The average person's bills are going up pretty fast, and I suspect they don't have a server in their cupboard running 24/7.

For a while now, I've wanted to swap out the CPU in my server. When I designed it, I had intended to get a 3900X - an absolute beast of a CPU with plenty of cores to pass through to VMs etc. Fast forward to purchasing time, I scaled myself down to a 3700X to save costs - and looking back, I'm very glad I did. Fast forward again a year or so and my CPU idles around 1% usage. As it turns out, my workload isn't very CPU-bound, as I'm not doing tonnes of compute or using tonnes of VMs unnecessarily (almost everything I do runs in LXC instead for reduced overhead).

The biggest problem with the 3700X for me, besides the amount of resource going unused, is the lack of a GPU. AMD's performance CPUs don't have integrated GPUs, so any GPU-intensive tasks like transcoding fell back to the CPU. Computers still need some form of GPU, so I had a terrible GT 710 in there to get a terminal onto a screen (which is about all it's good for nowadays). For how rarely it happened, I didn't really notice an issue, but some Jellyfin streams would start feeling sluggish on poor connections, and take a while to switch quality settings.

So I decided it's new CPU time!

#Which CPU?

I'm someone who does enjoy a good rabbit-hole (I've nerd-sniped myself on a number of occasions), and CPU research is quite the hole! My goals were simple: reduce power consumption, but gain a GPU - perfectly doable, I thought. My 3700X server idled around 80W, which compared to other people's servers is pretty frugal, but I thought I could do better.

After hearing Alex adore his i5-10400, and the power of QuickSync, I was leaning towards an Intel build. A 10400 was reasonably priced, as were its motherboards, and I'd seen those Intel CPUs underclocking themselves very nicely to save some power. The 11400 and 12400 were also valid offerings, but were slightly more expensive, especially in the motherboard department. But, it seemed unnecessary to have to replace CPU and motherboard, just in the name of power draw. The more I spent, the longer it'd take to break even. When spending money in the short term to break even in the long, the return on investment is critically important. It's fairly pointless spending £100 to save £1 per year, as it'll take your entire life to see the return.

And then, one night, it hit me - AMD APUs! Alongside AMD's "X" series are the lesser-known "G" series. With the G standing for Graphics, these CPUs do come with an integrated GPU - and a fairly decent one at that. Staying with AMD meant I would only need to change my CPU, reducing my upfront cost and avoiding the need to re-platform. After a little research, I settled on the 5600G.

The 5600G has the same TDP as my 3700X, but being a more mid-tier chip means it shouldn't need to prove itself and run quite so warm all the time. It also had a much lower base clock, which should help in that department, too. My B550 motherboard natively supports the 5000 series CPUs (after a BIOS update), so I could keep the board I had, which not only saved costs but reduced the time to swap it out. I was originally set on the 4600G, a slightly older CPU with similar benefits, but ended up spending the tiny amount more in the name of the forbidden "futureproofing". The 4600G may have been fine, but the newer Zen 3 architecture should bring some performance and efficiency benefits - which is the name of the game after all.

The GPU in the 5600G was also quite a plus. Whilst the power draw was my main concern, getting a GPU was a nice addition. I don't do much transcoding or GPU-intensive work, but having something at least semi-capable comes in handy. The 5600G has a "Cezanne"-era GPU, which according to this surprisingly hard to find Wikipedia page, supports every common encoding format besides the new shiny AV1 - which I can absolutely deal with.

And so, I bought one - used. Mine cost just over £130 including shipping and arrived in less than 2 days.

#Installation

Anyone who has swapped a CPU knows it's not a super complicated task, made even easier by the fact it was the only component I needed to change. As I mentioned, after a simple BIOS update, my motherboard supported 5000 series CPUs, and had graphics outputs on the rear IO.

<note>

A BIOS update was only an option for me because I had a compatible CPU already installed. If you don't, you may need to purchase a supported one temporarily to update the BIOS.

</note>

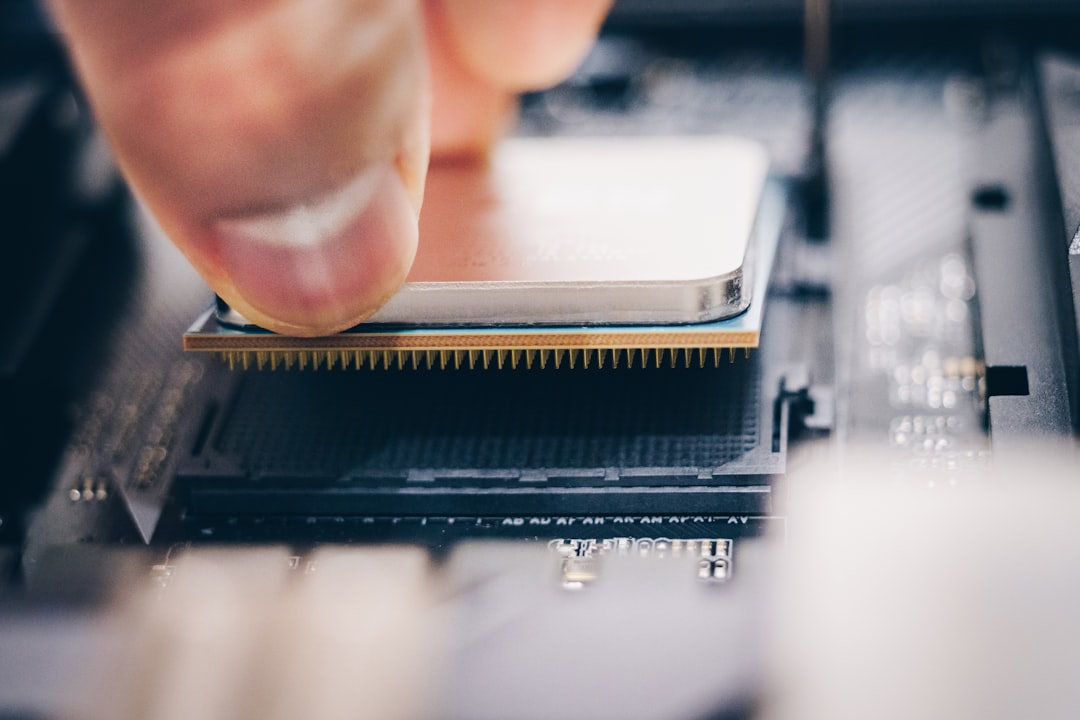

Installation was a breeze. Unscrew the cooler (Dark Rock Pro 3, massive overkill I know but it's very quiet), clean its contact area, disconnect the previous CPU, connect the new CPU, apply some thermal paste, connect the cooler and we're good! Start to finish took less than half an hour.

But once the server was back in its natural habitat, the problems began...

#POST

The "Power On Self Test" (POST) is the first set of checks a computer does to confirm whether all connected devices at least look reasonable. On my board, it consists of 4 stages: CPU, RAM, VGA, BOOT. The first POST took an unnerving amount of time, long enough to make me think I'd bought a dud, and it looped through the POST stages a few times. After a few minutes, it eventually stabilized, and the status LEDs flagged an issue with the GPU - which there shouldn't have been, as I had 2 installed (I hadn't disconnected the old one yet, just in case).

It's been a while since I'd built a computer, and even longer since I'd upgraded one, but when doing fairly fundamental hardware swaps, there's a fairly important step I had forgotten about: Reset the CMOS. The CMOS is where all the persistent data from the BIOS lives. Resetting it clears it back to factory defaults, which are more likely to work with any given CPU (as it's not made any assumptions about the hardware yet).

After a few CMOS resets (ended up having to disconnect the battery rather than using the jumpers), and a few re-seats of the GPU (might be unrelated), eventually it would POST, and I could get the device booting.

Once Proxmox booted, which took longer than I was expecting, it wasn't appearing on the network...

#Network

Once Proxmox booted up, I got the regular login prompt, which shows its IP, but I couldn't connect to the interface from my desktop. Worse still, it wouldn't even respond to ping, nor could it ping anything on my LAN or the internet. There were a few blinks of activity from the lights on the NIC, but nothing like normal levels.

When Proxmox boots up, you're met with a login terminal. This login terminal also gets used for some messages from the kernel, including every new virtual interface which gets created or destroyed. Every single docker container, LXC and VM on the machine lit up at exactly the same time, and made the terminal basically unusable. To make matters worse, at the time one of my containers had a boot looping problem, which meant every 30 seconds or so, it'd print out a few lines of logs. This is rather annoying in the terminal when trying to read config files to work out what's happening.

<fun-fact>

That message which pops up during initial login doesn't check the IP dynamically, it just shows whatever was available during install. Take a look at /etc/issue to see for yourself.

</fun-fact>

My initial step was ip a, to look at the interfaces and determine whether a new CPU resulted in a new IP address or something (which I knew didn't make sense, but worth checking). I could see the interface, and Proxmox's bridge, but the bridge didn't have any network details. The bridge is configured in /etc/network/interfaces, so that was the next step in my journey.

Opening the file (and dancing around the kernel messages), everything looked correct, besides 1 small detail. The bridge was set up to use port enp5s0 as its upstream, but ip a only showed enp6s0. A few lines of config change and a reboot, and everything was working.
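The mismatch is easy to miss by eye, so here is a minimal sketch of how it could be checked automatically. It scans the text of /etc/network/interfaces for bridge port entries and reports any that aren't among the interfaces the system actually has. The bridge name vmbr0, the addresses and the rest of the sample config are made up for illustration; only enp5s0 and enp6s0 come from my setup.

```python
import os

# Hypothetical sample config: vmbr0 and the addresses are illustrative only.
EXAMPLE_CONFIG = """\
auto lo
iface lo inet loopback

iface enp5s0 inet manual

auto vmbr0
iface vmbr0 inet static
    address 192.0.2.10/24
    gateway 192.0.2.1
    bridge-ports enp5s0
    bridge-stp off
    bridge-fd 0
"""


def system_interfaces(sysfs_net="/sys/class/net"):
    """Return the names of the network interfaces the kernel currently knows about."""
    return set(os.listdir(sysfs_net))


def find_missing_bridge_ports(interfaces_text, present_interfaces):
    """Map each bridge to the configured ports that are not present on the system."""
    missing = {}
    current_iface = None
    for raw_line in interfaces_text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comment lines
        words = line.split()
        if words[0] == "iface" and len(words) > 1:
            # Start of a new stanza: remember which interface it configures.
            current_iface = words[1]
        elif words[0] in ("bridge-ports", "bridge_ports") and current_iface:
            # "none" is a valid value meaning the bridge has no physical port.
            absent = [p for p in words[1:]
                      if p != "none" and p not in present_interfaces]
            if absent:
                missing[current_iface] = absent
    return missing
```

Calling `find_missing_bridge_ports(EXAMPLE_CONFIG, {"lo", "enp6s0", "vmbr0"})` flags enp5s0 on vmbr0, which is the situation I was in. On a real machine, you'd pass the contents of the actual file and `system_interfaces()` instead.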

Even now, I'm not sure why this happened (if you do, please comment below!). It might be something to do with the UEFI networking stack, but it's not caused me any further issues, and it's been reliably working after a handful of reboots.

And, as is just my luck, this is where things got even worse...

#Performance perils

Once Proxmox booted up, which took much longer than it normally did, everything felt rather sluggish. Sure the Proxmox web interface loaded fine, but all the VMs and LXCs inside took a long time to start up. Any of the applications took 3x longer to load than they used to - GitLab is a great example of this.

Now I know, GitLab isn't generally considered lightweight (unless you make some changes), but in my previous setup it would idle somewhere around 5% CPU usage in its LXC. Now, post-upgrade, it was as high as 50%. Before GitLab starts, it runs gitlab-ctl reconfigure to confirm everything looks right. This was taking almost 2 minutes to run (4x longer than usual), which systemd was considering a timeout and refusing to start.

CPU temperatures were no higher than before, sitting in the high 30s, so it definitely wasn't a heating issue. After rebooting and enabling DOCP, disabling EPU, even enabling a mild overclock, nothing seemed to help. Ryzen likes fast RAM, more so in the Zen 3 era, so ensuring the RAM was fast made sense, but it made no difference here. It's like somehow the 5600G was performing more like an i3 from 10 years ago than any of the reports or benchmarks I'd seen had shown. CPU frequency was sitting about right too, at 3.8 GHz...

... or so I thought. To go a little deeper, we need to talk about CPU governors.

#CPU Governors

The CPU governor is the part of the kernel which tells the CPU how to behave as far as dynamic clocking goes. Much like the old Intel advert says, it's designed to "Boost performance automatically", so when you need the power, it's there and ready, but when you don't, it can run much slower to conserve power. In Windows, this is called a "Power plan".

Naturally, there are a few "profiles" to allow the user to configure how they want the governor for that device to work. It might be that power draw is a huge factor for them (perhaps they're running mostly from a battery), or perhaps they don't care and want as much performance as they can get. The profiles give that customization.
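On Linux, the governor in use and the clock each core is currently running at are exposed through sysfs, in the cpufreq directory of each CPU. Below is a minimal sketch that reads them, assuming the usual /sys/devices/system/cpu layout. The function name and the `cpu_root` parameter are my own, and the parameter only exists so the function can be pointed at a different directory.

```python
from pathlib import Path


def read_cpufreq(cpu_root="/sys/devices/system/cpu"):
    """Return {cpu_name: (governor, current_mhz)} for every core exposing cpufreq."""
    result = {}
    policy_dirs = sorted(
        Path(cpu_root).glob("cpu[0-9]*/cpufreq"),
        key=lambda p: int(p.parent.name[3:]),  # numeric sort: cpu2 before cpu10
    )
    for policy_dir in policy_dirs:
        governor = (policy_dir / "scaling_governor").read_text().strip()
        # scaling_cur_freq is reported in kHz; convert to MHz for readability.
        cur_khz = int((policy_dir / "scaling_cur_freq").read_text().strip())
        result[policy_dir.parent.name] = (governor, cur_khz / 1000)
    return result
```

Looping over `read_cpufreq().items()` and printing each entry gives a quick per-core view. It's worth reading the clock from here rather than trusting a single headline figure elsewhere.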

By default, Proxmox uses the "performance" governor, which allows the CPU to stay at its highest clock for much longer, without trying to scale down to conserve power (which isn't much of a concern in a data center). However for home users, this isn't ideal. Several months ago, I changed the governor to "powersave", to save some power (hence the name). I didn't really notice a difference at the time, but kept it just in case there was one I wasn't seeing.

And here's where the problem lies.

Unlike the "performance" profile, which pins the CPU to its highest clock, the "powersave" profile pins the CPU to its lowest clock. On my 3700X, this was clearly still high enough to function just fine. On my new 5600G, this was a face-melting 400 MHz, which is exactly why it felt like a 10 year old CPU - because it had the clock speed of one. Swapping the governor to "conservative" made an immediate and noticeable difference to performance, and my server was back!
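Swapping governors comes down to writing the new name into each core's scaling_governor file in sysfs. Here is a minimal sketch of that, assuming the standard /sys/devices/system/cpu layout. The function name and the `cpu_root` parameter are hypothetical. Writing to the real files needs root, and the change doesn't survive a reboot unless something reapplies it at boot.

```python
from pathlib import Path


def set_governor(governor, cpu_root="/sys/devices/system/cpu"):
    """Write `governor` to every core's scaling_governor and return the cores changed."""
    policy_dirs = list(Path(cpu_root).glob("cpu[0-9]*/cpufreq"))

    # First pass: make sure every core offers the requested governor,
    # so we never end up with only half the cores switched over.
    for policy_dir in policy_dirs:
        available = (policy_dir / "scaling_available_governors").read_text().split()
        if governor not in available:
            raise ValueError(
                f"{governor!r} not available on {policy_dir.parent.name}: {available}"
            )

    # Second pass: apply the change.
    changed = []
    for policy_dir in policy_dirs:
        (policy_dir / "scaling_governor").write_text(governor + "\n")
        changed.append(policy_dir.parent.name)
    return sorted(changed)
```

In my case that would be `set_governor("conservative")`, run as root. Which governors are on offer depends on the frequency scaling driver in use, hence the check against scaling_available_governors first.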

This poses an alternative thought, in that I have no idea why my 3700X was able to perform as it did pinned to its lowest clock, but that's a problem I'm ok not knowing the answer to.

"conservative" works much more like I thought "powersave" did. "conservative" still allows the CPU to reach its full clock when it needs to, but conservatively brings the clock back down once it's done to keep power draw low, and leaves it there when it's not doing anything intense. "ondemand" (the default) is very similar, however doesn't bring the clock down quite as quickly. The Arch Wiki (obviously) has a great table talking about the available governors and configuration options. "conservative" allows the CPU to turbo nice and high when it needs to (such as CI builds, intensive page loads, backups etc), but stay nice and low during idle periods (like when I'm asleep) to conserve power.

#Power draw

The original drive behind this endeavor was to reduce the server's power draw to reduce my electricity bill, and hopefully gain an extra GPU in the process. For that to make sense, the break even point needs to be fairly soon. With an initial cost of £130, saving £10 a month would take just over a year to reclaim - not too bad. Reducing the power draw of my server reduces the dent it leaves in my electricity bill. Previously, my server drew around 80W, but now:

Just 60W!

My uneducated guess was around 60W (with exactly 0 data to back it up), so naturally I was pretty happy with the result. Saving 20W with 0 noticeable decrease in system performance, whilst gaining a GPU is a very good outcome in my books. Even when the GPU is active or I'm doing more intensive tasks, power consumption stays fairly low, even if a little spiky.

The reduced power draw does have a few other benefits. Less power means less heat, which lowers the temperature of the room it's in (not necessarily a good thing given the time of year), but also means the fans can afford to spin slower, saving both noise and even more power.

Granted, the above graph is much spikier than before, and even spikes much higher, but the fact the average is much lower is the main point.

I think the next target on my list is to try and remove the HBA. Consolidating down my storage architecture and removing just a handful of drives (perhaps moving the boot drives to NVMe) could remove the HBA entirely. Given how warm that thing runs, there's probably some power savings in there too.

#Breaking even

At the time of writing, electricity prices are pretty insane. After some napkin math, I'm paying around £0.40/kWh. The 20W draw saving is therefore around £6.50/mo, giving me a break-even time of around 20 months. Almost 2 years isn't quite as great as I would have hoped, but it's something.
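For anyone wanting to run the same napkin math with their own numbers, here is a small sketch of the calculation. It assumes the server runs 24/7, a flat unit price, and an average month of 730 hours. The function names are my own.

```python
HOURS_PER_MONTH = 24 * 365 / 12  # 730 hours in an average month


def monthly_saving(watts_saved, price_per_kwh):
    """Money saved per month by drawing `watts_saved` fewer watts around the clock."""
    kwh_per_month = watts_saved / 1000 * HOURS_PER_MONTH
    return kwh_per_month * price_per_kwh


def break_even_months(upfront_cost, saving_per_month):
    """Months until the accumulated savings cover the upfront cost."""
    return upfront_cost / saving_per_month
```

With a flat £0.40/kWh, `monthly_saving(20, 0.40)` comes out a little under £6 a month, and `break_even_months(130, monthly_saving(20, 0.40))` lands at roughly 22 months. That's the same ballpark as my figures above, with the difference down to rounding and what exactly goes into the effective unit price.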

Another way to bring forward the break even point is to sell my 3700X. Given the going rate, I could break even in less than 3 months!

Now that everything is working, I'm not looking at upgrading for a while. The platform is low power enough to suit my needs, but still has plenty of headroom should I want to expand. The 5600G is still a fairly new, well-supported CPU, which wipes the floor with most used Xeons at a fraction of the power draw.

For now, both I and my wallet can rest a little easier, and my Jellyfin streams can load a little faster.